Analyzer Overview

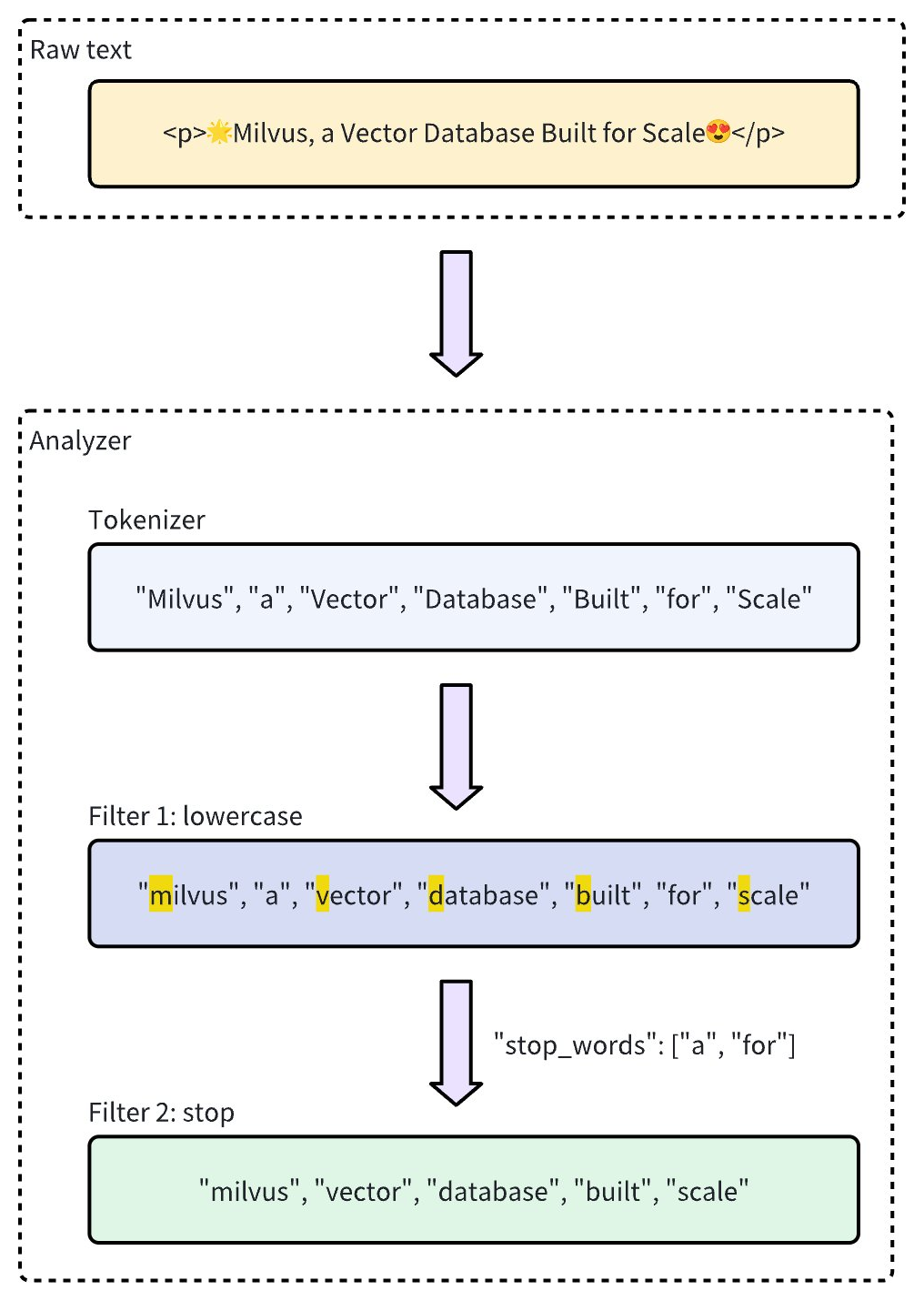

In text processing, an analyzer is a crucial component that converts raw text into a structured, searchable format. Each analyzer typically consists of two core elements: a tokenizer and filters. Together, they transform input text into tokens, refine these tokens, and prepare them for efficient indexing and retrieval.

In Zilliz Cloud, analyzers are configured during collection creation when you add VARCHAR fields to the collection schema. Tokens produced by an analyzer can be used to build an index for keyword matching or converted into sparse embeddings for full text search. For more information, refer to Full Text Search or Text Match.

The use of analyzers may impact performance:

Full text search: For full text search, DataNode and QueryNode channels consume data more slowly because they must wait for tokenization to complete. As a result, newly ingested data takes longer to become available for search.

Keyword match: For keyword matching, index creation is also slower since tokenization needs to finish before an index can be built.

Anatomy of an analyzer

An analyzer in Zilliz Cloud consists of exactly one tokenizer and zero or more filters.

- Tokenizer: The tokenizer breaks input text into discrete units called tokens. These tokens could be words or phrases, depending on the tokenizer type.

- Filters: Filters can be applied to tokens to further refine them, for example, by making them lowercase or removing common words.

Tokenizers support only UTF-8 format. Support for other formats will be added in future releases.
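The tokenizer-then-filters structure described above can be sketched in plain Python. This is only an illustration of the pipeline shape, not how Zilliz Cloud implements analyzers: the names `word_tokenizer`, `lowercase_filter`, `make_stop_filter`, and `analyze` are hypothetical, and the regex-based tokenizer is an assumed stand-in for a real tokenizer.

```python
import re
from typing import Callable, Iterable, List

Tokenizer = Callable[[str], List[str]]
TokenFilter = Callable[[List[str]], List[str]]


def word_tokenizer(text: str) -> List[str]:
    # Hypothetical stand-in for a real tokenizer: keeps runs of word characters.
    return re.findall(r"\w+", text)


def lowercase_filter(tokens: List[str]) -> List[str]:
    # Converts every token to lowercase.
    return [token.lower() for token in tokens]


def make_stop_filter(stop_words: Iterable[str]) -> TokenFilter:
    # Builds a filter that drops any token found in stop_words.
    blocked = set(stop_words)

    def stop_filter(tokens: List[str]) -> List[str]:
        return [token for token in tokens if token not in blocked]

    return stop_filter


def analyze(text: str, tokenizer: Tokenizer,
            filters: Iterable[TokenFilter] = ()) -> List[str]:
    # Exactly one tokenizer runs first, then zero or more filters in order.
    tokens = tokenizer(text)
    for token_filter in filters:
        tokens = token_filter(tokens)
    return tokens


tokens = analyze(
    "Vector Database Built for Scale",
    word_tokenizer,
    [lowercase_filter, make_stop_filter(["for"])],
)
print(tokens)
```

Filter order matters in this sketch: the stop filter compares against lowercase words, so it is placed after the lowercase filter.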

The workflow below shows how an analyzer processes text.

Analyzer types

Zilliz Cloud provides two types of analyzers to meet different text processing needs:

- Built-in analyzer: These are predefined configurations that cover common text processing tasks with minimal setup. Built-in analyzers are ideal for general-purpose searches, as they require no complex configuration.

- Custom analyzer: For more advanced requirements, custom analyzers allow you to define your own configuration by specifying both the tokenizer and zero or more filters. This level of customization is especially useful for specialized use cases where precise control over text processing is needed.

If you omit analyzer configurations during collection creation, Zilliz Cloud uses the standard analyzer for all text processing by default. For details, refer to Standard.

For optimal search and query performance, choose an analyzer that matches the language of your text data. For instance, while the standard analyzer is versatile, it may not be the best choice for languages with unique grammatical structures, such as Chinese, Japanese, or Korean. In such cases, using a language-specific analyzer like chinese or custom analyzers with specialized tokenizers (such as lindera, icu) and filters is highly recommended to ensure accurate tokenization and better search results.
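As a rough illustration of how the two analyzer types differ in configuration, the sketch below classifies a parameter dictionary by its keys. It assumes, based on the examples on this page, that a built-in analyzer is selected with a `type` key and a custom analyzer with a `tokenizer` key. The helper name `analyzer_kind` is hypothetical and not part of any SDK.

```python
from typing import Any, Dict, Optional


def analyzer_kind(params: Optional[Dict[str, Any]]) -> str:
    # No configuration: the standard analyzer is used by default.
    if not params:
        return "default"
    # Built-in analyzers are selected by name through the "type" key.
    if "type" in params:
        return "built-in"
    # Custom analyzers name their tokenizer explicitly.
    if "tokenizer" in params:
        return "custom"
    raise ValueError("analyzer params need either 'type' or 'tokenizer'")


print(analyzer_kind(None))
print(analyzer_kind({"type": "english"}))
print(analyzer_kind({"tokenizer": "standard", "filter": ["lowercase"]}))
```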

Built-in analyzer

Built-in analyzers in Zilliz Cloud clusters are pre-configured with specific tokenizers and filters, allowing you to use them immediately without needing to define these components yourself. Each built-in analyzer serves as a template that includes a preset tokenizer and filters, with optional parameters for customization.

For example, to use the standard built-in analyzer, simply specify its name standard as the type and optionally include extra configurations specific to this analyzer type, such as stop_words:

- Python

- Java

- NodeJS

- Go

- cURL

analyzer_params = {

"type": "standard", # Uses the standard built-in analyzer

"stop_words": ["a", "an", "for"] # Defines a list of common words (stop words) to exclude from tokenization

}

Map<String, Object> analyzerParams = new HashMap<>();

analyzerParams.put("type", "standard");

analyzerParams.put("stop_words", Arrays.asList("a", "an", "for"));

const analyzer_params = {

"type": "standard", // Uses the standard built-in analyzer

"stop_words": ["a", "an", "for"] // Defines a list of common words (stop words) to exclude from tokenization

};

analyzerParams := map[string]any{"type": "standard", "stop_words": []string{"a", "an", "for"}}

export analyzerParams='{

"type": "standard",

"stop_words": ["a", "an", "for"]

}'

To check the execution result of an analyzer, use the run_analyzer method:

- Python

- Java

- NodeJS

- Go

- cURL

# Sample text to analyze

text = "An efficient system relies on a robust analyzer to correctly process text for various applications."

# Run analyzer

result = client.run_analyzer(

text,

analyzer_params

)

import io.milvus.v2.service.vector.request.RunAnalyzerReq;

import io.milvus.v2.service.vector.response.RunAnalyzerResp;

List<String> texts = new ArrayList<>();

texts.add("An efficient system relies on a robust analyzer to correctly process text for various applications.");

RunAnalyzerResp resp = client.runAnalyzer(RunAnalyzerReq.builder()

.texts(texts)

.analyzerParams(analyzerParams)

.build());

List<RunAnalyzerResp.AnalyzerResult> results = resp.getResults();

// Sample text to analyze

const text = "An efficient system relies on a robust analyzer to correctly process text for various applications."

// Run analyzer

const result = await client.run_analyzer({

text,

analyzer_params

});

import (

"context"

"encoding/json"

"fmt"

"github.com/milvus-io/milvus/client/v2/milvusclient"

)

bs, _ := json.Marshal(analyzerParams)

texts := []string{"An efficient system relies on a robust analyzer to correctly process text for various applications."}

option := milvusclient.NewRunAnalyzerOption(texts).

WithAnalyzerParams(string(bs))

result, err := client.RunAnalyzer(ctx, option)

if err != nil {

fmt.Println(err.Error())

// handle error

}

# restful

export MILVUS_HOST="YOUR_CLUSTER_ENDPOINT"

export TEXT_TO_ANALYZE="An efficient system relies on a robust analyzer to correctly process text for various applications."

curl -X POST "http://${MILVUS_HOST}/v2/vectordb/common/run_analyzer" \

-H "Content-Type: application/json" \

-d '{

"text": ["'"${TEXT_TO_ANALYZE}"'"],

"analyzerParams": "{\"type\":\"standard\",\"stop_words\":[\"a\",\"an\",\"for\"]}"

}'

The output will be:

['efficient', 'system', 'relies', 'on', 'robust', 'analyzer', 'to', 'correctly', 'process', 'text', 'various', 'applications']

This demonstrates that the analyzer properly tokenizes the input text by filtering out the stop words "a", "an", and "for", while returning the remaining meaningful tokens.

The configuration of the standard built-in analyzer above is equivalent to setting up a custom analyzer with the following parameters, where tokenizer and filter options are explicitly defined to achieve similar functionality:

- Python

- Java

- NodeJS

- Go

- cURL

analyzer_params = {

"tokenizer": "standard",

"filter": [

"lowercase",

{

"type": "stop",

"stop_words": ["a", "an", "for"]

}

]

}

Map<String, Object> analyzerParams = new HashMap<>();

analyzerParams.put("tokenizer", "standard");

analyzerParams.put("filter",

Arrays.asList("lowercase",

new HashMap<String, Object>() {{

put("type", "stop");

put("stop_words", Arrays.asList("a", "an", "for"));

}}));

const analyzer_params = {

"tokenizer": "standard",

"filter": [

"lowercase",

{

"type": "stop",

"stop_words": ["a", "an", "for"]

}

]

};

analyzerParams = map[string]any{"tokenizer": "standard",

"filter": []any{"lowercase", map[string]any{

"type": "stop",

"stop_words": []string{"a", "an", "for"},

}}}

export analyzerParams='{

"tokenizer": "standard",

"filter": [

"lowercase",

{

"type": "stop",

"stop_words": ["a", "an", "for"]

}

]

}'
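The equivalence described above can be expressed as a small conversion function. This is a sketch under the assumption, taken from this page, that the standard built-in analyzer corresponds to the standard tokenizer plus a lowercase filter, with a stop filter added only when `stop_words` is provided. The function name `expand_standard` is hypothetical.

```python
from typing import Any, Dict, List


def expand_standard(params: Dict[str, Any]) -> Dict[str, Any]:
    # Only the "standard" built-in analyzer is handled in this sketch.
    if params.get("type") != "standard":
        raise ValueError("expected a built-in analyzer config with type 'standard'")

    filters: List[Any] = ["lowercase"]
    stop_words = params.get("stop_words")
    if stop_words:
        # The optional stop_words setting becomes an explicit stop filter.
        filters.append({"type": "stop", "stop_words": list(stop_words)})

    return {"tokenizer": "standard", "filter": filters}


custom = expand_standard({"type": "standard", "stop_words": ["a", "an", "for"]})
print(custom)
```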

Zilliz Cloud offers the following built-in analyzers, each designed for specific text processing needs:

- standard: Suitable for general-purpose text processing, applying standard tokenization and lowercase filtering.

- english: Optimized for English-language text, with support for English stop words.

- chinese: Specialized for processing Chinese text, including tokenization adapted for Chinese language structures.

Custom analyzer

For more advanced text processing, custom analyzers in Zilliz Cloud allow you to build a tailored text-handling pipeline by specifying both a tokenizer and filters. This setup is ideal for specialized use cases where precise control is required.

Tokenizer

The tokenizer is a mandatory component for a custom analyzer, which initiates the analyzer pipeline by breaking down input text into discrete units or tokens. Tokenization follows specific rules, such as splitting by whitespace or punctuation, depending on the tokenizer type. This process allows for more precise and independent handling of each word or phrase.

For example, a tokenizer would convert text "Vector Database Built for Scale" into separate tokens:

["Vector", "Database", "Built", "for", "Scale"]

Example of specifying a tokenizer:

- Python

- Java

- NodeJS

- Go

- cURL

analyzer_params = {

"tokenizer": "whitespace",

}

Map<String, Object> analyzerParams = new HashMap<>();

analyzerParams.put("tokenizer", "whitespace");

const analyzer_params = {

"tokenizer": "whitespace",

};

analyzerParams = map[string]any{"tokenizer": "whitespace"}

export analyzerParams='{

"tokenizer": "whitespace"

}'

Filter

Filters are optional components working on the tokens produced by the tokenizer, transforming or refining them as needed. For example, after applying a lowercase filter to the tokenized terms ["Vector", "Database", "Built", "for", "Scale"], the result might be:

["vector", "database", "built", "for", "scale"]

Filters in a custom analyzer can be either built-in or custom, depending on configuration needs.

- Built-in filters: Pre-configured by Zilliz Cloud, requiring minimal setup. You can use these filters out-of-the-box by specifying their names. The filters below are built-in for direct use:

  - lowercase: Converts text to lowercase, ensuring case-insensitive matching. For details, refer to Lowercase.

  - asciifolding: Converts non-ASCII characters to ASCII equivalents, simplifying multilingual text handling. For details, refer to ASCII folding.

  - alphanumonly: Retains only alphanumeric characters by removing others. For details, refer to Alphanumonly.

  - cnalphanumonly: Removes tokens that contain any characters other than Chinese characters, English letters, or digits. For details, refer to Cnalphanumonly.

  - cncharonly: Removes tokens that contain any non-Chinese characters. For details, refer to Cncharonly.

Example of using a built-in filter:

- Python

- Java

- NodeJS

- Go

- cURL

analyzer_params = {

"tokenizer": "standard", # Mandatory: Specifies tokenizer

"filter": ["lowercase"], # Optional: Built-in filter that converts text to lowercase

}

Map<String, Object> analyzerParams = new HashMap<>();

analyzerParams.put("tokenizer", "standard");

analyzerParams.put("filter", Collections.singletonList("lowercase"));

const analyzer_params = {

"tokenizer": "standard", // Mandatory: Specifies tokenizer

"filter": ["lowercase"], // Optional: Built-in filter that converts text to lowercase

};

analyzerParams = map[string]any{"tokenizer": "standard",

"filter": []any{"lowercase"}}

export analyzerParams='{

"tokenizer": "standard",

"filter": ["lowercase"]

}'

- Custom filters: Custom filters allow for specialized configurations. You can define a custom filter by choosing a valid filter type (filter.type) and adding specific settings for each filter type. Examples of filter types that support customization:

  - stop: Removes specified common words by setting a list of stop words (e.g., "stop_words": ["of", "to"]). For details, refer to Stop.

  - length: Excludes tokens based on length criteria, such as setting a maximum token length. For details, refer to Length.

  - stemmer: Reduces words to their root forms for more flexible matching. For details, refer to Stemmer.

Example of configuring a custom filter:

- Python

- Java

- NodeJS

- Go

- cURL

analyzer_params = {

"tokenizer": "standard", # Mandatory: Specifies tokenizer

"filter": [

{

"type": "stop", # Specifies 'stop' as the filter type

"stop_words": ["of", "to"], # Customizes stop words for this filter type

}

]

}

Map<String, Object> analyzerParams = new HashMap<>();

analyzerParams.put("tokenizer", "standard");

analyzerParams.put("filter",

Collections.singletonList(new HashMap<String, Object>() {{

put("type", "stop");

put("stop_words", Arrays.asList("of", "to"));

}}));

const analyzer_params = {

"tokenizer": "standard", // Mandatory: Specifies tokenizer

"filter": [

{

"type": "stop", // Specifies 'stop' as the filter type

"stop_words": ["of", "to"], // Customizes stop words for this filter type

}

]

};

analyzerParams = map[string]any{"tokenizer": "standard",

"filter": []any{map[string]any{

"type": "stop",

"stop_words": []string{"of", "to"},

}}}

export analyzerParams='{

"tokenizer": "standard",

"filter": [

{

"type": "stop",

"stop_words": ["of", "to"]

}

]

}'

nlohmann::json analyzer_params = {

{"tokenizer", "standard"},

{"filter", {{{"type", "stop"}, {"stop_words", {"a", "an", "for"}}}}},

};
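To make the effect of filter specifications more concrete, the following sketch applies a list of filter specs to tokens in plain Python. It is an illustration only, not the Zilliz Cloud implementation: the helper names `apply_filter` and `apply_filters` are hypothetical, only the `lowercase`, `stop`, and `length` (with `max`) forms shown on this page are handled, and measuring token length in characters is an assumption.

```python
from typing import Any, Dict, List, Union

FilterSpec = Union[str, Dict[str, Any]]


def apply_filter(tokens: List[str], spec: FilterSpec) -> List[str]:
    # Built-in filter referenced by name.
    if spec == "lowercase":
        return [token.lower() for token in tokens]
    # Custom filter: drop tokens listed in stop_words.
    if isinstance(spec, dict) and spec.get("type") == "stop":
        blocked = set(spec["stop_words"])
        return [token for token in tokens if token not in blocked]
    # Custom filter: drop tokens longer than max (length in characters is assumed).
    if isinstance(spec, dict) and spec.get("type") == "length":
        max_len = spec["max"]
        return [token for token in tokens if len(token) <= max_len]
    raise ValueError(f"unsupported filter spec in this sketch: {spec!r}")


def apply_filters(tokens: List[str], specs: List[FilterSpec]) -> List[str]:
    # Filters run in the order they are listed.
    for spec in specs:
        tokens = apply_filter(tokens, spec)
    return tokens


filtered = apply_filters(
    ["State", "of", "the", "Art", "supercalifragilistic"],
    [
        "lowercase",
        {"type": "stop", "stop_words": ["of", "to"]},
        {"type": "length", "max": 10},
    ],
)
print(filtered)
```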

Example use

In this example, you will create a collection schema that includes:

- A vector field for embeddings.

- Two VARCHAR fields for text processing:

  - One field uses a built-in analyzer.

  - The other uses a custom analyzer.

Before incorporating these configurations into your collection, you'll verify each analyzer using the run_analyzer method.

Step 1: Initialize MilvusClient and create schema

Begin by setting up the Milvus client and creating a new schema.

- Python

- Java

- NodeJS

- Go

- cURL

from pymilvus import MilvusClient, DataType

# Set up a Milvus client

client = MilvusClient(

uri="YOUR_CLUSTER_ENDPOINT",

token="YOUR_CLUSTER_TOKEN"

)

# Create a new schema

schema = client.create_schema(auto_id=True, enable_dynamic_field=False)

import io.milvus.v2.client.ConnectConfig;

import io.milvus.v2.client.MilvusClientV2;

import io.milvus.v2.common.DataType;

import io.milvus.v2.common.IndexParam;

import io.milvus.v2.service.collection.request.AddFieldReq;

import io.milvus.v2.service.collection.request.CreateCollectionReq;

// Set up a Milvus client

ConnectConfig config = ConnectConfig.builder()

.uri("YOUR_CLUSTER_ENDPOINT")

.token("YOUR_CLUSTER_TOKEN")

.build();

MilvusClientV2 client = new MilvusClientV2(config);

// Create schema

CreateCollectionReq.CollectionSchema schema = CreateCollectionReq.CollectionSchema.builder()

.enableDynamicField(false)

.build();

import { MilvusClient, DataType } from "@zilliz/milvus2-sdk-node";

// Set up a Milvus client

const client = new MilvusClient({

address: "YOUR_CLUSTER_ENDPOINT",

token: "YOUR_CLUSTER_TOKEN"

});

import (

"context"

"fmt"

"github.com/milvus-io/milvus/client/v2/column"

"github.com/milvus-io/milvus/client/v2/entity"

"github.com/milvus-io/milvus/client/v2/index"

"github.com/milvus-io/milvus/client/v2/milvusclient"

)

ctx, cancel := context.WithCancel(context.Background())

defer cancel()

client, err := milvusclient.New(ctx, &milvusclient.ClientConfig{

Address: "YOUR_CLUSTER_ENDPOINT",

APIKey: "YOUR_CLUSTER_TOKEN",

})

if err != nil {

fmt.Println(err.Error())

// handle err

}

defer client.Close(ctx)

schema := entity.NewSchema().WithAutoID(true).WithDynamicFieldEnabled(false)

# restful

export MILVUS_HOST="YOUR_CLUSTER_ENDPOINT"

export MILVUS_TOKEN="YOUR_CLUSTER_TOKEN"

curl -X POST "http://${MILVUS_HOST}/v2/vectordb/collections/create" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer ${MILVUS_TOKEN}" \

-d '{

"collectionName": "my_collection",

"dimension": 768,

"schema": {

"autoId": true,

"enableDynamicField": false

}

}'

Step 2: Define and verify analyzer configurations

- Configure and verify a built-in analyzer (english):

  - Configuration: Define the analyzer parameters for the built-in English analyzer.

  - Verification: Use run_analyzer to check that the configuration produces the expected tokenization.

- Python

- Java

- NodeJS

- Go

- cURL

# Built-in analyzer configuration for English text processing

analyzer_params_built_in = {

"type": "english"

}

# Verify built-in analyzer configuration

sample_text = "Milvus simplifies text analysis for search."

result = client.run_analyzer(sample_text, analyzer_params_built_in)

print("Built-in analyzer output:", result)

# Expected output:

# Built-in analyzer output: ['milvus', 'simplifi', 'text', 'analysi', 'search']

Map<String, Object> analyzerParamsBuiltin = new HashMap<>();

analyzerParamsBuiltin.put("type", "english");

List<String> texts = new ArrayList<>();

texts.add("Milvus simplifies text analysis for search.");

RunAnalyzerResp resp = client.runAnalyzer(RunAnalyzerReq.builder()

.texts(texts)

.analyzerParams(analyzerParamsBuiltin)

.build());

List<RunAnalyzerResp.AnalyzerResult> results = resp.getResults();

// Use a built-in analyzer for VARCHAR field `title_en`

const analyzer_params_built_in = {

type: "english",

};

const sample_text = "Milvus simplifies text analysis for search.";

const result = await client.run_analyzer({

text: sample_text,

analyzer_params: analyzer_params_built_in

});

analyzerParamsBuiltin := map[string]any{"type": "english"}

bs, _ := json.Marshal(analyzerParamsBuiltin)

texts := []string{"Milvus simplifies text analysis for search."}

option := milvusclient.NewRunAnalyzerOption(texts).

WithAnalyzerParams(string(bs))

result, err := client.RunAnalyzer(ctx, option)

if err != nil {

fmt.Println(err.Error())

// handle error

}

# restful

export MILVUS_HOST="YOUR_CLUSTER_ENDPOINT"

export SAMPLE_TEXT="Milvus simplifies text analysis for search."

curl -X POST "http://${MILVUS_HOST}/v2/vectordb/common/run_analyzer" \

-H "Content-Type: application/json" \

-d '{

"text": ["'"${SAMPLE_TEXT}"'"],

"analyzerParams": "{\"type\":\"english\"}"

}'

- Configure and verify a custom analyzer:

  - Configuration: Define a custom analyzer that uses a standard tokenizer along with a built-in lowercase filter and custom filters for token length and stop words.

  - Verification: Use run_analyzer to ensure the custom configuration processes text as intended.

- Python

- Java

- NodeJS

- Go

- cURL

# Custom analyzer configuration with a standard tokenizer and custom filters

analyzer_params_custom = {

"tokenizer": "standard",

"filter": [

"lowercase", # Built-in filter: convert tokens to lowercase

{

"type": "length", # Custom filter: restrict token length

"max": 40

},

{

"type": "stop", # Custom filter: remove specified stop words

"stop_words": ["of", "for"]

}

]

}

# Verify custom analyzer configuration

sample_text = "Milvus provides flexible, customizable analyzers for robust text processing."

result = client.run_analyzer(sample_text, analyzer_params_custom)

print("Custom analyzer output:", result)

# Expected output:

# Custom analyzer output: ['milvus', 'provides', 'flexible', 'customizable', 'analyzers', 'robust', 'text', 'processing']

// Configure a custom analyzer

Map<String, Object> analyzerParamsCustom = new HashMap<>();

analyzerParamsCustom.put("tokenizer", "standard");

analyzerParamsCustom.put("filter",

Arrays.asList("lowercase",

new HashMap<String, Object>() {{

put("type", "length");

put("max", 40);

}},

new HashMap<String, Object>() {{

put("type", "stop");

put("stop_words", Arrays.asList("of", "for"));

}}

)

);

List<String> texts = new ArrayList<>();

texts.add("Milvus provides flexible, customizable analyzers for robust text processing.");

RunAnalyzerResp resp = client.runAnalyzer(RunAnalyzerReq.builder()

.texts(texts)

.analyzerParams(analyzerParamsCustom)

.build());

List<RunAnalyzerResp.AnalyzerResult> results = resp.getResults();

// Configure a custom analyzer for VARCHAR field `title`

const analyzer_params_custom = {

tokenizer: "standard",

filter: [

"lowercase",

{

type: "length",

max: 40,

},

{

type: "stop",

stop_words: ["of", "for"],

},

],

};

const sample_text = "Milvus provides flexible, customizable analyzers for robust text processing.";

const result = await client.run_analyzer({

text: sample_text,

analyzer_params: analyzer_params_custom

});

analyzerParamsCustom := map[string]any{"tokenizer": "standard",

"filter": []any{"lowercase",

map[string]any{

"type": "length",

"max": 40,

},

map[string]any{

"type": "stop",

"stop_words": []string{"of", "for"},

}}}

bs, _ := json.Marshal(analyzerParamsCustom)

texts := []string{"Milvus provides flexible, customizable analyzers for robust text processing."}

option := milvusclient.NewRunAnalyzerOption(texts).

WithAnalyzerParams(string(bs))

result, err := client.RunAnalyzer(ctx, option)

if err != nil {

fmt.Println(err.Error())

// handle error

}

# restful

export MILVUS_HOST="YOUR_CLUSTER_ENDPOINT"

export SAMPLE_TEXT="Milvus provides flexible, customizable analyzers for robust text processing."

# Use the custom analyzer configuration

curl -X POST "http://${MILVUS_HOST}/v2/vectordb/common/run_analyzer" \

-H "Content-Type: application/json" \

-d '{

"text": ["'"${SAMPLE_TEXT}"'"],

"analyzerParams": "{\"tokenizer\":\"standard\",\"filter\":[\"lowercase\",{\"type\":\"length\",\"max\":40},{\"type\":\"stop\",\"stop_words\":[\"of\",\"for\"]}]}"

}'
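In the cURL requests above, analyzerParams is passed as a JSON-encoded string nested inside the JSON request body, which is why its inner quotes are escaped. The sketch below shows one way to build such a body with Python's standard `json` module instead of escaping by hand. The helper name `build_run_analyzer_body` is hypothetical; the field names `text` and `analyzerParams` are taken from the cURL examples on this page.

```python
import json
from typing import Any, Dict, List


def build_run_analyzer_body(texts: List[str], analyzer_params: Dict[str, Any]) -> str:
    # The analyzer configuration is serialized first, so it travels as a string value.
    params_as_string = json.dumps(analyzer_params, separators=(",", ":"))
    # The outer dump escapes the inner quotes automatically.
    return json.dumps({"text": list(texts), "analyzerParams": params_as_string})


params = {
    "tokenizer": "standard",
    "filter": [
        "lowercase",
        {"type": "length", "max": 40},
        {"type": "stop", "stop_words": ["of", "for"]},
    ],
}
body = build_run_analyzer_body(
    ["Milvus provides flexible, customizable analyzers for robust text processing."],
    params,
)
print(body)
```

The resulting string can be used as the request body of the run_analyzer call, for example by passing it to cURL with the -d option.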

Step 3: Add analyzer to schema field

Now that you have verified your analyzer configurations, add them to your schema fields:

- Python

- Java

- NodeJS

- Go

- cURL

# Add VARCHAR field 'title_en' using the built-in analyzer configuration

schema.add_field(

field_name='title_en',

datatype=DataType.VARCHAR,

max_length=1000,

enable_analyzer=True,

analyzer_params=analyzer_params_built_in,

enable_match=True,

)

# Add VARCHAR field 'title' using the custom analyzer configuration

schema.add_field(

field_name='title',

datatype=DataType.VARCHAR,

max_length=1000,

enable_analyzer=True,

analyzer_params=analyzer_params_custom,

enable_match=True,

)

# Add a vector field for embeddings

schema.add_field(field_name="embedding", datatype=DataType.FLOAT_VECTOR, dim=3)

# Add a primary key field

schema.add_field(field_name="id", datatype=DataType.INT64, is_primary=True)

schema.addField(AddFieldReq.builder()

.fieldName("title_en")

.dataType(DataType.VarChar)

.maxLength(1000)

.enableAnalyzer(true)

.analyzerParams(analyzerParamsBuiltin)

.enableMatch(true) // must enable this if you use TextMatch

.build());

schema.addField(AddFieldReq.builder()

.fieldName("title")

.dataType(DataType.VarChar)

.maxLength(1000)

.enableAnalyzer(true)

.analyzerParams(analyzerParamsCustom)

.enableMatch(true) // must enable this if you use TextMatch

.build());

// Add vector field

schema.addField(AddFieldReq.builder()

.fieldName("embedding")

.dataType(DataType.FloatVector)

.dimension(3)

.build());

// Add primary field

schema.addField(AddFieldReq.builder()

.fieldName("id")

.dataType(DataType.Int64)

.isPrimaryKey(true)

.autoID(true)

.build());

// Create schema

const schema = {

auto_id: true,

fields: [

{

name: "id",

data_type: DataType.INT64,

is_primary: true,

},

{

name: "title_en",

data_type: DataType.VARCHAR,

max_length: 1000,

enable_analyzer: true,

analyzer_params: analyzer_params_built_in,

enable_match: true,

},

{

name: "title",

data_type: DataType.VARCHAR,

max_length: 1000,

enable_analyzer: true,

analyzer_params: analyzer_params_custom,

enable_match: true,

},

{

name: "embedding",

data_type: DataType.FLOAT_VECTOR,

dim: 3,

},

],

};

schema.WithField(entity.NewField().

WithName("id").

WithDataType(entity.FieldTypeInt64).

WithIsPrimaryKey(true).

WithIsAutoID(true),

).WithField(entity.NewField().

WithName("embedding").

WithDataType(entity.FieldTypeFloatVector).

WithDim(3),

).WithField(entity.NewField().

WithName("title_en").

WithDataType(entity.FieldTypeVarChar).

WithMaxLength(1000).

WithEnableAnalyzer(true).

WithAnalyzerParams(analyzerParamsBuiltin).

WithEnableMatch(true),

).WithField(entity.NewField().

WithName("title").

WithDataType(entity.FieldTypeVarChar).

WithMaxLength(1000).

WithEnableAnalyzer(true).

WithAnalyzerParams(analyzerParamsCustom).

WithEnableMatch(true),

)

# restful

export SCHEMA_CONFIG='{

"autoId": true,

"enableDynamicField": false,

"fields": [

{

"fieldName": "id",

"dataType": "Int64",

"isPrimary": true

},

{

"fieldName": "title_en",

"dataType": "VarChar",

"elementTypeParams": {

"max_length": "1000",

"enable_analyzer": true,

"analyzer_params": "{\"type\":\"english\"}",

"enable_match": true

}

},

{

"fieldName": "title",

"dataType": "VarChar",

"elementTypeParams": {

"max_length": "1000",

"enable_analyzer": true,

"analyzer_params": "{\"tokenizer\":\"standard\",\"filter\":[\"lowercase\",{\"type\":\"length\",\"max\":40},{\"type\":\"stop\",\"stop_words\":[\"of\",\"for\"]}]}",

"enable_match": true

}

},

{

"fieldName": "embedding",

"dataType": "FloatVector",

"elementTypeParams": {

"dim": "3"

}

}

]

}'

Step 4: Prepare index parameters and create the collection

- Python

- Java

- NodeJS

- Go

- cURL

# Set up index parameters for the vector field

index_params = client.prepare_index_params()

index_params.add_index(field_name="embedding", metric_type="COSINE", index_type="AUTOINDEX")

# Create the collection with the defined schema and index parameters

client.create_collection(

collection_name="my_collection",

schema=schema,

index_params=index_params

)

// Set up index params for vector field

List<IndexParam> indexes = new ArrayList<>();

indexes.add(IndexParam.builder()

.fieldName("embedding")

.indexType(IndexParam.IndexType.AUTOINDEX)

.metricType(IndexParam.MetricType.COSINE)

.build());

// Create collection with defined schema

CreateCollectionReq requestCreate = CreateCollectionReq.builder()

.collectionName("my_collection")

.collectionSchema(schema)

.indexParams(indexes)

.build();

client.createCollection(requestCreate);

// Set up index params for vector field

const indexParams = [

{

field_name: "embedding",

metric_type: "COSINE",

index_type: "AUTOINDEX",

},

];

// Create collection with defined schema

await client.createCollection({

collection_name: "my_collection",

schema: schema,

index_params: indexParams,

});

console.log("Collection created successfully!");

idx := index.NewAutoIndex(index.MetricType(entity.COSINE))

indexOption := milvusclient.NewCreateIndexOption("my_collection", "embedding", idx)

err = client.CreateCollection(ctx,

milvusclient.NewCreateCollectionOption("my_collection", schema).

WithIndexOptions(indexOption))

if err != nil {

fmt.Println(err.Error())

// handle error

}

export INDEX_PARAMS='[{"fieldName": "embedding", "metricType": "COSINE", "indexType": "AUTOINDEX"}]'

# restful

curl -X POST "YOUR_CLUSTER_ENDPOINT/v2/vectordb/collections/create" \

-H "Content-Type: application/json" \

-d "{

\"collectionName\": \"my_collection\",

\"schema\": ${SCHEMA_CONFIG},

\"indexParams\": ${INDEX_PARAMS}

}"

Example use on the Zilliz Cloud console

You can also use the Zilliz Cloud console to perform the above operations. For details, play the demo below.

What's next

When configuring an analyzer, we recommend reading the following best practice article to determine the most suitable configurations for your use case:

After configuring an analyzer, you can integrate with text retrieval features provided by Zilliz Cloud. For details: