Hosted Models (Private Preview)

Zilliz Cloud can host embedding and reranking models on Zilliz-managed infrastructure. You can deploy dedicated, fully managed model instances and use them directly from Zilliz Cloud for stable and high-performance inference.

With a managed model instance, you can insert raw data into a collection. Zilliz Cloud automatically generates vector embeddings with the deployed model during ingestion. For semantic search, you only provide the raw query text. Zilliz Cloud uses the same model to create a query vector, compares it with stored vectors, and returns the most relevant results.

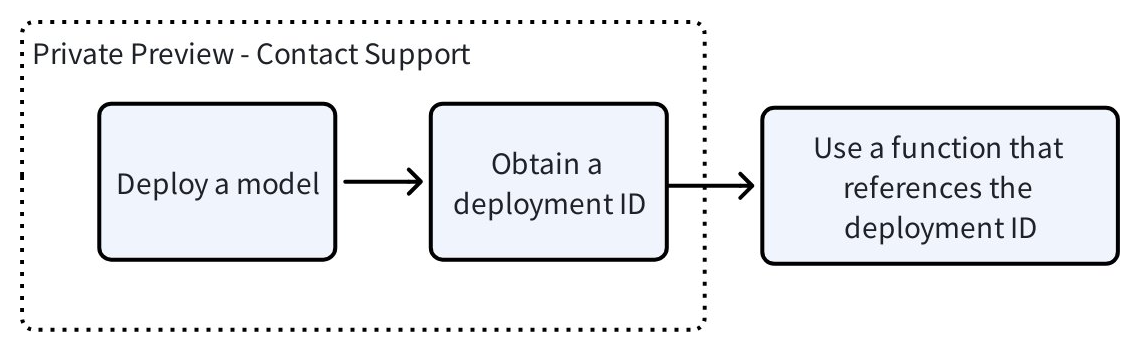

The following diagram shows the procedures for using hosted models.

Deploy a model

Currently, Zilliz Cloud supports the following regions, instance types, and models.

If you have specific requirements for hosted models, please contact us.

Supported regions

The model deployment region should be consistent with your cluster region. Available options include:

| Region | Location |
|---|---|
| aws-us-west-2 | Oregon, USA |

Supported instance types

The instance type determines the available compute resources. Available options include:

| Instance Type | Resources |
|---|---|
| g6.xlarge | |

Supported models

Available options include:

| Type | Model |
|---|---|
| Embedding | Qwen/Qwen3-Embedding-0.6B |
| Embedding | Qwen/Qwen3-Embedding-4B |
| Embedding | Qwen/Qwen3-Embedding-8B |
| Embedding | BAAI/bge-small-en-v1.5 |
| Embedding | BAAI/bge-small-zh-v1.5 |
| Embedding | BAAI/bge-base-en-v1.5 |
| Embedding | BAAI/bge-base-zh-v1.5 |
| Embedding | BAAI/bge-large-en-v1.5 |
| Embedding | BAAI/bge-large-zh-v1.5 |
| Reranking | BAAI/bge-reranker-base |
| Reranking | BAAI/bge-reranker-large |
| Reranking | Qwen/Qwen3-Reranker-0.6B |
| Reranking | Qwen/Qwen3-Reranker-4B |
| Reranking | Qwen/Qwen3-Reranker-8B |
| Semantic Highlighter | zilliz/semantic-highlight-bilingual-v1 |

Obtain a deployment ID

Zilliz deploys the model based on the information you provide, which takes about 15 minutes. When the deployment is ready, Zilliz Cloud Support returns a deployment ID. Use this ID when you create embedding, reranking, or semantic highlighter functions.

"deploymentId": "68f8889be4b01215a275972a"

Use the deployed model in a function

Once you have the deployment ID, you can create collections that use the deployed model through embedding or reranking functions.
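Because the same deployment ID appears in every function definition, it can help to read it once and reuse it. The following is a minimal sketch, assuming the ID reaches you as a JSON object containing a `deploymentId` field like the one shown on this page. The helper name `extract_deployment_id` and the payload shape are illustrative assumptions, not part of the Zilliz Cloud API.

```python
import json


def extract_deployment_id(payload: str) -> str:
    """Return the deploymentId value from a JSON payload (hypothetical helper)."""
    data = json.loads(payload)
    deployment_id = data.get("deploymentId", "")
    if not isinstance(deployment_id, str) or not deployment_id.strip():
        raise ValueError("payload does not contain a non-empty deploymentId")
    return deployment_id.strip()


# Assumed payload shape: the deploymentId field wrapped in a JSON object.
payload = '{"deploymentId": "68f8889be4b01215a275972a"}'
MODEL_DEPLOYMENT_ID = extract_deployment_id(payload)
```

The resulting `MODEL_DEPLOYMENT_ID` can then be passed wherever a function expects `model_deployment_id`.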

Use an embedding function

- Create a collection with an embedding function.

  - Define at least one VARCHAR field for the raw text.

  - Define at least one vector field for the embedding vectors generated by the model.

  - Set the vector field dimension to match the model’s output dimension.

from pymilvus import DataType, Function, FunctionType

# Assumes milvus_client is a MilvusClient connected to your cluster
# and collection_name holds the name of the collection to create.
schema = milvus_client.create_schema()
schema.add_field("id", DataType.INT64, is_primary=True, auto_id=False)
schema.add_field("document", DataType.VARCHAR, max_length=9000)
# Important: the dimension must be supported by the deployed model.
schema.add_field("dense", DataType.FLOAT_VECTOR, dim=384)

# Define the embedding function
text_embedding_function = Function(
    name="zilliz-bge-small-en-v1.5",
    function_type=FunctionType.TEXTEMBEDDING,
    input_field_names=["document"],  # Scalar field(s) containing text data to embed
    output_field_names="dense",  # Vector field(s) for storing embeddings
    params={
        "provider": "zilliz",
        "model_deployment_id": "...",  # Use the model deployment ID we provide you
        "truncation": True,  # Optional: if true, inputs longer than the model's maximum input length are truncated
        "dimension": "384",  # Optional: shorten the output vector dimension, only if supported by the model
    },
)
schema.add_function(text_embedding_function)

index_params = milvus_client.prepare_index_params()
index_params.add_index(
    field_name="dense",
    index_name="dense_index",
    index_type="AUTOINDEX",
    metric_type="IP",
)

ret = milvus_client.create_collection(
    collection_name,
    schema=schema,
    index_params=index_params,
    consistency_level="Strong",
)

- Insert raw text data.

Insert only the raw text into the collection. Zilliz Cloud automatically calls the embedding function and populates the vector field.

rows = [
    {"id": 1, "document": "Artificial intelligence was founded as an academic discipline in 1956."},
    {"id": 2, "document": "Alan Turing was the first person to conduct substantial research in AI."},
    {"id": 3, "document": "Born in Maida Vale, London, Turing was raised in southern England."},
]

insert_result = milvus_client.insert(collection_name, rows, progress_bar=True)

- Conduct a similarity search with raw text data.

Provide the query as raw text. Zilliz Cloud generates the query vector using the same model and performs the similarity search.

search_params = {
    "params": {"nprobe": 10},
}

queries = [
    "When was artificial intelligence founded",
    "Where was Alan Turing born?",
]

result = milvus_client.search(
    collection_name,
    data=queries,
    anns_field="dense",
    search_params=search_params,
    limit=3,
    output_fields=["document"],
    consistency_level="Strong",
)
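The requirement that the vector field dimension match the model's output dimension can be checked before you create the collection. The sketch below is one way to do that. The dimensions in the table are the native output sizes published on each model's card, not values taken from this page, so verify them against your deployment. The helper `check_field_dim` is hypothetical, and whether a given model supports a shortened dimension is not determined here.

```python
# Native output dimensions as published on each model's card (verify before use).
MODEL_OUTPUT_DIMS = {
    "BAAI/bge-small-en-v1.5": 384,
    "BAAI/bge-small-zh-v1.5": 512,
    "BAAI/bge-base-en-v1.5": 768,
    "BAAI/bge-base-zh-v1.5": 768,
    "BAAI/bge-large-en-v1.5": 1024,
    "BAAI/bge-large-zh-v1.5": 1024,
    "Qwen/Qwen3-Embedding-0.6B": 1024,
    "Qwen/Qwen3-Embedding-4B": 2560,
    "Qwen/Qwen3-Embedding-8B": 4096,
}


def check_field_dim(model: str, field_dim: int) -> str:
    """Compare a vector field dimension with the model's native dimension.

    Returns "native" when they are equal and "shortened" when the field is
    smaller, which is only valid if the model supports the optional
    "dimension" parameter. Raises ValueError when the field is larger than
    anything the model can produce.
    """
    native = MODEL_OUTPUT_DIMS[model]
    if field_dim <= 0 or field_dim > native:
        raise ValueError(
            f"{model} outputs {native} dimensions; field dim {field_dim} is invalid"
        )
    return "native" if field_dim == native else "shortened"


# The schema in this section uses dim=384 with a bge-small-en-v1.5 deployment.
status = check_field_dim("BAAI/bge-small-en-v1.5", 384)
```

A "shortened" result is a prompt to confirm that the deployed model accepts the `dimension` parameter before relying on it.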

Use a reranking function

You can also configure a reranking function that uses the deployed model to rerank search results.

import numpy as np
from pymilvus import Function, FunctionType

dim = 384  # Must match the dimension of the vector field being searched

rng = np.random.default_rng(seed=19530)
vectors_to_search = rng.random((1, dim))

# Define the reranking function
ranker = Function(
    name="model_rerank_fn",
    input_field_names=["document"],
    function_type=FunctionType.RERANK,
    params={
        "reranker": "model",
        "provider": "zilliz",
        "model_deployment_id": "...",  # Use the model deployment ID we provide you
        # Query text: the number of query strings must exactly match
        # the number of queries in your search operation
        "queries": ["machine learning for time series"] * len(vectors_to_search),
    },
)

# Use it during search
result = milvus_client.search(
    collection_name,
    vectors_to_search,
    limit=3,
    output_fields=["*"],
    ranker=ranker,
)
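The comment on `queries` above states that the number of query strings must match the number of queries in the search. A minimal sketch of checking that before building the function parameters follows. The helper `build_rerank_params` is hypothetical and only assembles the same `params` dictionary used above; it does not call Zilliz Cloud.

```python
from typing import Any, Dict, List


def build_rerank_params(
    deployment_id: str, queries: List[str], num_search_vectors: int
) -> Dict[str, Any]:
    """Build reranker params, failing early on a query-count mismatch."""
    if len(queries) != num_search_vectors:
        raise ValueError(
            f"got {len(queries)} query strings for {num_search_vectors} search vectors"
        )
    return {
        "reranker": "model",
        "provider": "zilliz",
        "model_deployment_id": deployment_id,
        "queries": list(queries),
    }


# One query string for one search vector, as in the example above.
params = build_rerank_params("...", ["machine learning for time series"], 1)
```

The returned dictionary can be passed as `params` when constructing the `Function` with `FunctionType.RERANK`.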

Use a semantic highlighter function

During search, you can use a hosted highlighter model to post-process your search results by highlighting text segments that are semantically related to the user's query.

from pymilvus import SemanticHighlighter

# Define the search query
queries = ["When was artificial intelligence founded"]

# Configure semantic highlighter
highlighter = SemanticHighlighter(
    queries,
    ["document"],  # Fields to highlight
    pre_tags=["<mark>"],  # Tag before highlighted text
    post_tags=["</mark>"],  # Tag after highlighted text
    model_deployment_id="YOUR_MODEL_ID",  # Deployed highlight model ID
)

# Perform search with highlighting
results = milvus_client.search(
    collection_name,
    data=queries,
    anns_field="dense",
    search_params={"params": {"nprobe": 10}},
    limit=3,
    output_fields=["document"],
    highlighter=highlighter,
)

# Process results
for hits in results:
    for hit in hits:
        highlight = hit.get("highlight", {}).get("document", {})
        print(f"ID: {hit['id']}")
        print(f"Search Score: {hit['distance']:.4f}")  # Vector similarity score
        print(f"Fragments: {highlight.get('fragments', [])}")
        print(f"Highlight Confidence: {highlight.get('scores', [])}")  # Semantic relevance score
        print()

Billing

Using hosted models incurs only function and model services charges. Because inference runs within Zilliz Cloud, your data does not traverse the public internet, so you do not incur data transfer charges.

For model unit prices by region, please contact sales.

Cost calculation

Function and Model Services Cost = Model Unit Price × Usage Time

- Model Unit Price: For details, contact sales.

- Usage Time: The total time the model deployment is running, measured in hours, regardless of whether the model is actively used.
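As a worked example of the formula above, the sketch below computes the charge for a deployment that runs continuously for 30 days. The unit price of 1.50 per hour is a made-up placeholder chosen only to show the arithmetic; actual prices come from sales. The function name `model_service_cost` is also illustrative.

```python
def model_service_cost(unit_price_per_hour: float, usage_hours: float) -> float:
    """Function and Model Services Cost = Model Unit Price x Usage Time."""
    if unit_price_per_hour < 0 or usage_hours < 0:
        raise ValueError("unit price and usage time must be non-negative")
    return unit_price_per_hour * usage_hours


# Hypothetical unit price; the real value is available from sales.
HYPOTHETICAL_UNIT_PRICE = 1.50

# Usage time counts every hour the deployment is running, idle or not:
# 24 hours x 30 days = 720 hours.
usage_hours = 24 * 30
cost = model_service_cost(HYPOTHETICAL_UNIT_PRICE, usage_hours)
print(f"{usage_hours} hours x {HYPOTHETICAL_UNIT_PRICE} = {cost}")
```

Because usage time is measured while the deployment is running rather than while it is serving requests, the cost in this example is the same whether the model handles one request or one million.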