Milvus Notebooks: Build AI Apps with Ease

Explore the Milvus vector database through interactive notebooks that walk you step by step through building GenAI applications.

Top Picks for AI Developers

Multimodal RAG with Milvus

Build image search with multimodal RAG, combining Milvus, Visualized BGE, and GPT-4o to retrieve relevant images and generate insights about the results.


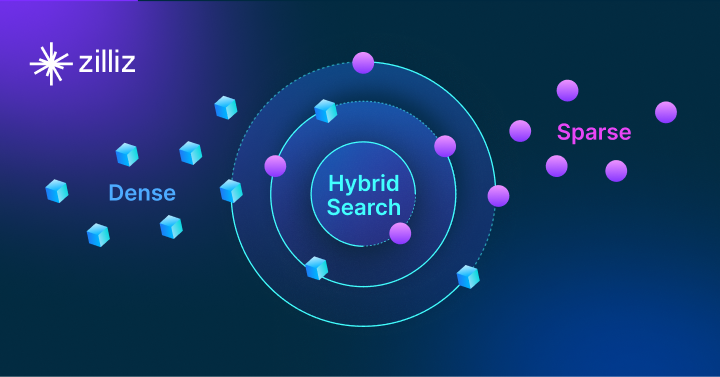

Hybrid Search with Milvus

Learn how to improve text search relevance with hybrid search, combining the dense and sparse vectors produced by the BGE-M3 model in Milvus.

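Hybrid search runs a dense (semantic) search and a sparse (lexical) search separately, then merges the two ranked lists. Milvus offers built-in rerankers for this step, including Reciprocal Rank Fusion (RRF). The pure-Python sketch below only illustrates the RRF arithmetic, where each document scores the sum of `1 / (k + rank)` over the lists it appears in. It is not Milvus's implementation, and the function name `rrf_fuse` and the document IDs are hypothetical.

```python
from collections import defaultdict


def rrf_fuse(result_lists, k=60, limit=None):
    """Fuse ranked lists of document IDs with Reciprocal Rank Fusion.

    Hypothetical helper: each document scores sum(1 / (k + rank)) over
    every list it appears in, where rank is 1-based.
    """
    scores = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so output is deterministic
    fused = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return fused[:limit] if limit is not None else fused


# Hypothetical ranked results from the two searches
dense_hits = ["doc3", "doc1", "doc7"]   # dense (semantic) search
sparse_hits = ["doc1", "doc9", "doc3"]  # sparse (lexical) search

for doc_id, score in rrf_fuse([dense_hits, sparse_hits], limit=3):
    print(doc_id, round(score, 5))
```

Because RRF uses only ranks, it needs no score normalization between the dense and sparse searches, whose raw similarity scores are not directly comparable. Documents found by both searches (here `doc1` and `doc3`) rise to the top.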

RAG with Milvus and LlamaIndex

Build a Retrieval-Augmented Generation (RAG) system with LlamaIndex and Milvus that retrieves relevant documents to ground LLM-generated answers.

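In a RAG pipeline, the question is embedded, the most similar stored passages are retrieved, and those passages are inserted into the prompt sent to the LLM. In the notebook, LlamaIndex and Milvus handle embedding, storage, and retrieval. The standalone sketch below replaces them with a linear cosine-similarity scan over hand-written toy vectors and a hypothetical `build_prompt` helper, so it shows only the data flow, not either library's API.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, corpus, top_k=2):
    """Return the top_k (text, score) pairs most similar to query_vec.

    corpus is a list of (text, vector) pairs; a vector database such as
    Milvus would perform this search with an index instead of a full scan.
    """
    scored = [(text, cosine(query_vec, vec)) for text, vec in corpus]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


def build_prompt(question, passages):
    """Hypothetical prompt template that grounds the LLM in retrieved text."""
    context = "\n".join(f"- {text}" for text, _ in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Toy 3-dimensional "embeddings" (hypothetical; real models use hundreds of dims)
corpus = [
    ("Milvus is an open-source vector database.", [0.9, 0.1, 0.0]),
    ("LlamaIndex connects LLMs to external data.", [0.1, 0.9, 0.1]),
    ("Paris is the capital of France.", [0.0, 0.1, 0.9]),
]
query_vec = [0.8, 0.3, 0.0]  # stands in for an embedding of the question

hits = retrieve(query_vec, corpus, top_k=2)
prompt = build_prompt("What is Milvus?", hits)
print(prompt)
```

The resulting prompt would then be sent to an LLM; only the retrieved passages reach the model, which is what keeps its answer tied to the stored documents.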

All Notebooks

| Notebook | Category | Use Cases | Milvus Features |
|---|---|---|---|
| Build RAG with Milvus | LLM | RAG | - |
| Multimodal RAG with Milvus | LLM, Multimodal | RAG, Image/Video Search | Dynamic field |
| Image Search with Milvus | - | Image/Video Search | Dynamic field |
| Hybrid Search with Milvus | - | Text Search | Hybrid Search, Multi-vector |
| RAG with Milvus and LlamaIndex | LLM | RAG | Filtered Search |
| RAG with Milvus and LangChain | LLM | RAG | Filtered Search |
| Multi-Vector Hybrid Search with Milvus | - | Text Search, Image/Video Search | Hybrid Search, Multi-vector |
| Semantic Search with Milvus and OpenAI | Embedding | Text Search | - |
| Question Answering using Milvus and HuggingFace | Embedding | Question Answering | - |
| Use Milvus as a Vector Store in LangChain | Multi-tenancy, LLM | Text Search | Filtered Search, Partition Key |