Metric Types

Similarity metrics are used to measure similarities among vectors. Choosing an appropriate distance metric helps improve classification and clustering performance significantly.

Currently, Zilliz Cloud supports these types of similarity Metrics: Euclidean distance (L2), Inner Product (IP), Cosine Similarity (COSINE), JACCARD, HAMMING, and BM25 (specifically designed for full text search on sparse vectors).

The table below summarizes the mapping between different field types and their corresponding metric types.

Field Type | Dimension Range | Supported Metric Types | Default Metric Type |

|---|---|---|---|

| 2-32,768 |

|

|

| 2-32,768 |

|

|

| 2-32,768 |

|

|

| 2-32,768 |

|

|

| No need to specify the dimension. |

|

|

| 8-32,768*8 |

|

|

For vector fields of the

SPARSE_FLOAT_VECTORtype, use theBM25metric type only when performing full text search. For more information, refer to Full Text Search.For vector fields of the

BINARY_VECTORtype, the dimension value (dim) must be a multiple of 8.

The table below summarizes the characteristics of the similarity distance values of all supported metric types and their value range.

Metric Type | Characteristics of the Similarity Distance Values | Similarity Distance Value Range |

|---|---|---|

| A smaller value indicates a greater similarity. | [0, ∞) |

| A greater value indicates a greater similarity. | [-1, 1] |

| A greater value indicates a greater similarity. | [-1, 1] |

| A smaller value indicates a greater similarity. | [0, 1] |

| Estimates Jaccard similarity from MinHash signature bits; smaller distance = more similar | [0, 1] |

| A smaller value indicates a greater similarity. | [0, dim(vector)] |

| Score the relevance based on the term frequency, inverted document frequency, and document normalization. | [0, ∞) |

Euclidean distance (L2)

Essentially, Euclidean distance measures the length of a segment that connects 2 points.

The formula for Euclidean distance is as follows:

where a = (a0, a1,..., an-1) and b = (b0, b1,..., bn-1) are two points in n-dimensional Euclidean space.

It's the most commonly used distance metric and is very useful when the data are continuous.

Zilliz Cloud only calculates the value before applying the square root when Euclidean distance is chosen as the distance metric.

Inner product (IP)

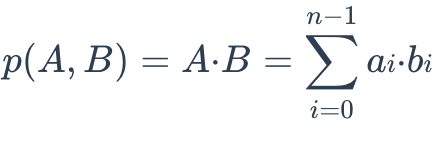

The IP distance between two embeddings is defined as follows:

IP is more useful if you need to compare non-normalized data or when you care about magnitude and angle.

If you use IP to calculate similarities between embeddings, you must normalize your embeddings. After normalization, the inner product equals cosine similarity.

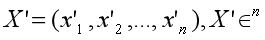

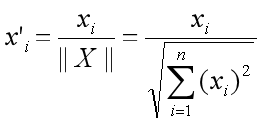

Suppose X' is normalized from embedding X:

The correlation between the two embeddings is as follows:

Cosine similarity

Cosine similarity uses the cosine of the angle between two sets of vectors to measure how similar they are. You can think of the two sets of vectors as line segments starting from the same point, such as [0,0,...], but pointing in different directions.

To calculate the cosine similarity between two sets of vectors A = (a0, a1,..., an-1) and B = (b0, b1,..., bn-1), use the following formula:

The cosine similarity is always in the interval [-1, 1]. For example, two proportional vectors have a cosine similarity of 1, two orthogonal vectors have a similarity of 0, and two opposite vectors have a similarity of -1. The larger the cosine, the smaller the angle between the two vectors, indicating that these two vectors are more similar to each other.

By subtracting their cosine similarity from 1, you can get the cosine distance between two vectors.

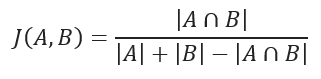

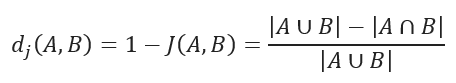

JACCARD distance

JACCARD distance coefficient measures the similarity between two sample sets and is defined as the cardinality of the intersection of the defined sets divided by the cardinality of the union of them. It can only be applied to finite sample sets.

JACCARD distance measures the dissimilarity between data sets and is obtained by subtracting the JACCARD similarity coefficient from 1. For binary variables, JACCARD distance is equivalent to the Tanimoto coefficient.

MHJACCARD

MinHash Jaccard (MHJACCARD) is a metric type used for efficient, approximate similarity search over large sets—such as document word sets, user tag sets, or genomic k-mer sets. Instead of comparing raw sets directly, MHJACCARD compares MinHash signatures, which are compact representations designed to estimate Jaccard similarity efficiently.

This approach is significantly faster than computing exact Jaccard similarity and is especially useful in large-scale or high-dimensional scenarios.

Applicable vector type

BINARY_VECTOR, where each vector stores a MinHash signature. Each element corresponds to the minimum hash value under one of the independent hash functions applied to the original set.

Distance definition

MHJACCARD measures how many positions in two MinHash signatures match. The higher the match ratio, the more similar the underlying sets are.

Zilliz Cloud reports:

- Distance = 1 - estimated similarity (match ratio)

The distance value ranges from 0 to 1:

-

0 means the MinHash signatures are identical (estimated Jaccard similarity = 1)

-

1 means no matches at any position (estimated Jaccard similarity = 0)

For information on technical details, refer to MINHASH_LSH.

HAMMING distance

HAMMING distance measures binary data strings. The distance between two strings of equal length is the number of bit positions at which the bits are different.

For example, suppose there are two strings, 1101 1001 and 1001 1101.

11011001 ⊕ 10011101 = 01000100. Since, this contains two 1s, the HAMMING distance, d (11011001, 10011101) = 2.

BM25 similarity

BM25 is a widely used text relevance measurement method, specifically designed for full text search. It combines the following three key factors:

-

Term Frequency (TF): Measures how frequently a term appears in a document. While higher frequencies often indicate greater importance, BM25 uses the saturation parameter to prevent overly frequent terms from dominating the relevance score.

-

Inverse Document Frequency (IDF): Reflects the importance of a term across the entire corpus. Terms appearing in fewer documents receive a higher IDF value, indicating greater contribution to relevance.

-

Document Length Normalization: Longer documents tend to score higher due to containing more terms. BM25 mitigates this bias by normalizing document lengths, with parameter controlling the strength of this normalization.

The BM25 scoring is calculated as follows:

score(D, Q)=\sum_{i=1}^{n}IDF(q_i)\cdot \{\{TF(q_i,D)\cdot(k_1+1)}\over\{TF(q_i, D)+k_1\cdot(1-b+b\cdot \{\{|D|}\over{avgdl}})}}Parameter description:

-

: The query text provided by the user.

-

: The document being evaluated.

-

: Term frequency, representing how often term appears in document .

-

: Inverse document frequency, calculated as:

IDF(q_i)=\log(\{N-n(q_i)+0.5\over n(q_i)+0.5} + 1)where is the total number of documents in the corpus, and is the number of documents containing term .

-

: Length of document (total number of terms).

-

: Average length of all documents in the corpus.

-

: Controls the influence of term frequency on the score. Higher values increase the importance of term frequency. The typical range is [1.2, 2.0], while Zilliz Cloud allows a range of [0, 3].

-

: Controls the degree of length normalization, ranging from 0 to 1. When the value is 0, no normalization is applied; when the value is 1, full normalization is applied.

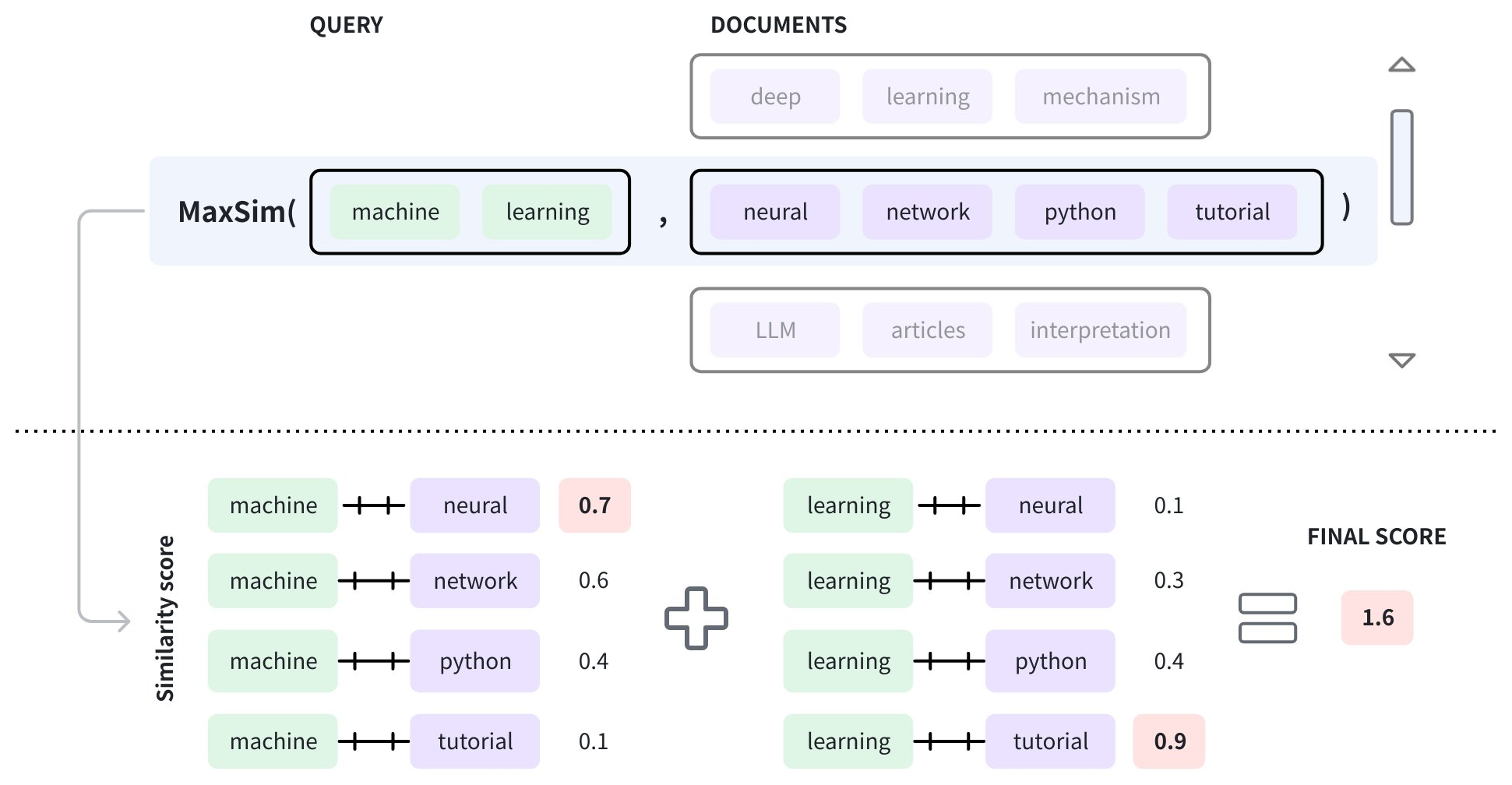

Maximum similarity

The maximum similarity, also known as MAX_SIM, measures the similarity between vector embedding lists instead of plain vector embeddings. The main idea is to split each document into contextual chunks or tokens, create vector embeddings for each of them, and store them as a list of embeddings per document. When a query is received, it is also divided into tokens, and an embedding list is generated accordingly.

The distance or similarity score between the query and the documents is calculated using the above formula, known as the maximum similarity (MAX_SIM). The arguments in the formula are as follows:

-

: The query text provided by the user, which has been split into a vector embedding list, like .

-

: A document being evaluated, which has been divided into a vector embedding list, like .

-

: The i-th vector embedding in the query embedding list.

-

: The j-th vector embedding in the document.

To determine the similarity score between the query and a document, the vector embeddings of each query token are compared with those in the document to get a list of similarity scores. Then the highest scores from all score lists are summed to produce the final score.

In Zilliz Cloud, you can use MAX_SIM to measure the similarity between the query and the documents stored in arrays of structs.

The following table lists the applicable metric types in the MAX_SIM series:

Metric type | Description |

|---|---|

MAX_SIM_L2 | L2 is used to calculate the distance between each query token and each document token, generating multiple score lists, while MAX_SIM determines the final score by summing the highest scores across all score lists. |

MAX_SIM_IP | IP is used to calculate the distance between each query token and each document token, generating multiple score lists, while MAX_SIM determines the final score by summing the highest scores from all score lists. |

MAX_SIM_COSINE | COSINE is used to calculate the distance between each query token and each document token, generating multiple score lists, while MAX_SIM determines the final score by summing the highest scores from all score lists. |