Function & Model Inference Overview

Zilliz Cloud provides a unified search architecture for building modern retrieval systems, including semantic search, lexical search, hybrid search, and intelligent reranking. Rather than exposing these capabilities as isolated features, Zilliz Cloud organizes them around a single core abstraction: the Function.

What is a Function?

In Zilliz Cloud, a Function is a configurable execution unit that applies a specific operation at a defined stage of the search workflow.

A Function answers three practical questions:

-

When does this operation run? Before search or after search.

-

What input does it operate on? Raw text, vector representations or retrieved candidate results.

-

What output does it produce? Vector embeddings used for retrieval, or reordered results returned to the user.

From a workflow perspective, Functions participate in search in two distinct stages:

-

Pre-search: Functions run before search to convert text into vector representations. These vectors determine which candidates are retrieved.

-

Post-search: Functions run after candidate retrieval to refine the ordering of results without changing the candidate set.

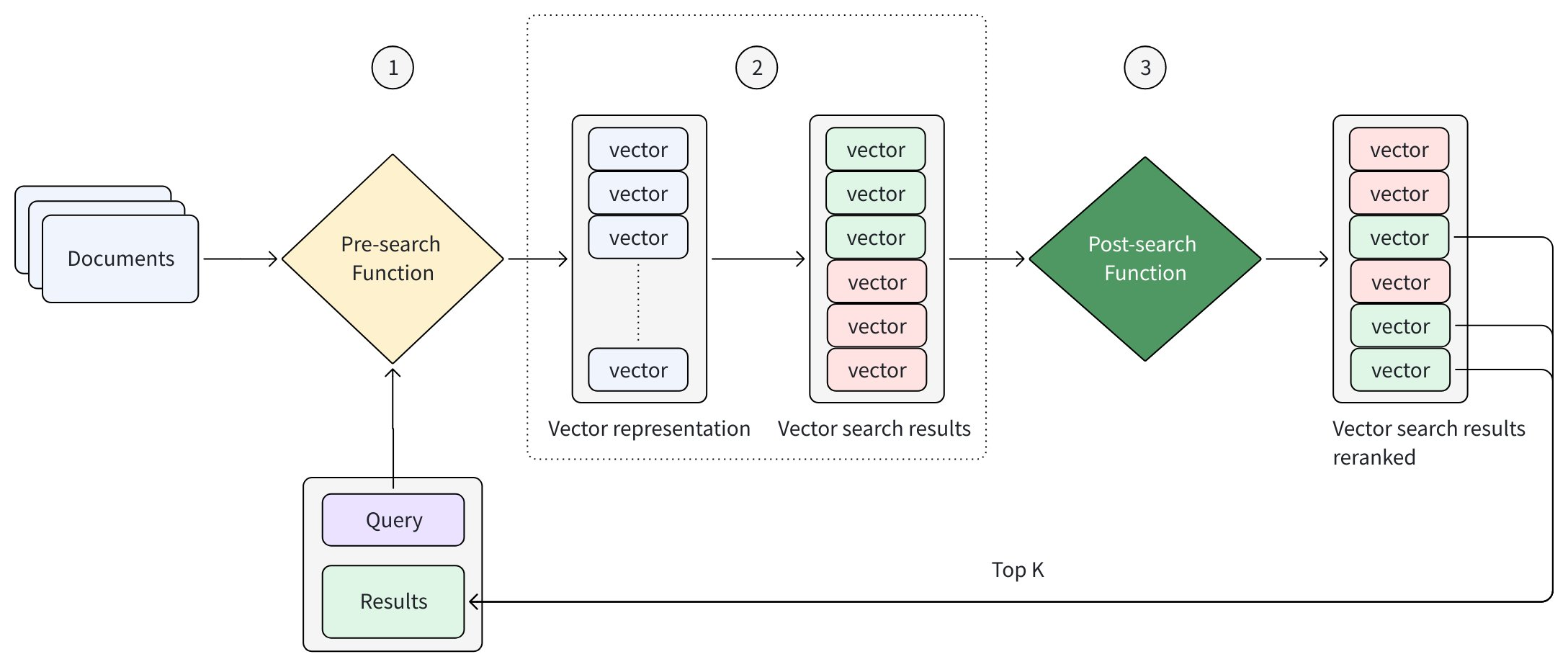

The following diagram provides an abstraction of how Functions work in the search workflow.

Every search request follows the same high-level flow:

-

The Pre-search Function generates vector representations from input text

-

The search engine retrieves candidate results based on those vectors

-

(Optional) The Post-search Function reranks the retrieved candidates

Function categories

Functions in Zilliz Cloud are categorized based on when they run in the search workflow and what role they play. At a high level, Functions fall into two groups:

-

Pre-search Functions, which convert text into vector embeddings and determine candidate retrieval

-

Post-search Functions, which refine the ordering of retrieved candidates

Pre-search Functions: Convert text to vector embeddings

Pre-search Functions run before candidate retrieval. Their role is to convert raw text—both stored documents and incoming queries—into vector representations that the search engine uses to identify relevant candidates.

Different Pre-search Functions generate different types of embeddings, which directly affects how retrieval is performed.

The table below summarizes the available Pre-search Functions:

Function Type | Vector Type | Description | Typical Scenarios |

|---|---|---|---|

BM25 Function | Sparse embeddings | Computes lexical relevance based on term matching, term frequency, and document length normalization. Executes entirely within the database engine as a local mechanism; no model inference required. | Keyword-driven full text search, documentation and code search, and workloads where term matching, low latency, and deterministic behavior are critical. |

Model-based Embedding Functions | Dense embeddings | Encodes the semantic meaning of text using machine learning models, enabling similarity-based retrieval beyond exact keywords. Requires model inference via hosted models or third-party model services. | Semantic search, natural-language queries, Q&A and RAG pipelines, and use cases where conceptual similarity matters more than literal term overlap. |

All Pre-search Functions are applied consistently to both document data and query text, ensuring retrieval is performed within the same representation space.

Post-search Functions: Rerank candidate results

Post-search Functions are applied after candidate retrieval. Their purpose is to refine the ranking of retrieved candidates without adding or removing items from the candidate set.

These functions operate exclusively on the results returned by the search stage and apply additional ranking logic or relevance signals to improve result quality. They do not affect indexing, retrieval, or filtering behavior—only the final ordering of results.

The table below summarizes the available Post-search Functions:

Function Type | Operates On | Description | Typical Scenarios |

|---|---|---|---|

Hybrid Search Rankers | Multiple result sets retrieved from hybrid search | Combine and rebalance results retrieved from different retrieval strategies using methods such as weighted ranking or reciprocal rank fusion (RRF). | Hybrid search scenarios that combine semantic and lexical retrieval and require balanced result fusion. |

Rule-based Rankers | Candidate results from single-vector or hybrid search | Adjust ranking based on predefined rules or numeric signals, such as boosting or decay-based scoring. | Business-driven ranking logic, recency or popularity boosts, and scenarios requiring predictable, non-ML reranking. |

Model-based Rankers | Candidate results from single-vector or hybrid search | Use machine learning models to evaluate relevance and reorder results based on learned or semantic signals. | Intelligent reranking, relevance refinement using semantic understanding, and LLM-based relevance evaluation. |

Because Post-search Functions operate only on retrieved candidates, they are refinement steps that affect result order but not retrieval scope.

Understand model inference

In the Function-based architecture of Zilliz Cloud, model inference is not a standalone concept or execution stage. Instead, it is an implementation detail used by specific Function types when machine learning-based signals are required.

Where model inference fits in

Model inference refers to the runtime execution of machine learning models to generate semantic signals, such as:

-

Dense vector embeddings derived from text

-

Relevance scores used to rerank search results

Within Zilliz Cloud, model inference is used only by model-based functions, including:

-

Model-based Pre-search Functions, which convert raw text into dense vector embeddings

-

Model-based Rankers, which evaluate relevance and reorder retrieved candidates

Other Functions, such as the BM25 Function and rule-based rankers, run entirely within the database engine and do not require model inference.

Sources of model inference

Zilliz Cloud supports two sources of model inference. Both provide model-based capabilities, but differ in how models are provisioned and managed:

Aspect | Hosted Models | Third-Party Model Services |

|---|---|---|

Where models run | Inside Zilliz Cloud | External model provider (OpenAI, Voyage AI, etc.) |

Who manages models | Zilliz Cloud | External model provider |

How access is set up | See Hosted Models | Through model provider integration on your own |

Credentials | Provided during onboarding with Zilliz Cloud support | Provided by you (for example, API keys) |

Typical use cases | Tightly integrated or customized deployments | Using standard models from established providers |

Setup complexity | Higher (requires onboarding) | Lower (connect your existing API keys) |

Choose Hosted Models if you need:

-

Tight integration with Zilliz Cloud (single vendor, unified support)

-

Custom model fine-tuning or specialized models

-

Predictable performance and latency

-

Simplified credential management

Choose Third-Party Model Services if you:

-

Already have an existing relationship with a model provider

-

Want to leverage the latest models from providers like OpenAI

-

Prefer flexibility to switch providers

Supported model providers

Zilliz Cloud integrates with leading model providers that offer different capabilities. The table below shows which providers support text embedding and reranking:

Provider availability and supported capabilities may vary by region and release. Refer to provider-specific documentation for the most up-to-date information.

Model Provider | Text Embedding | Reranking |

|---|---|---|

OpenAI | No | |

Voyage AI | ||

Cohere |